How does data flow execution work?

The term "dataflow" encompasses a lot of different types of execution. You might hear about "synchronous dataflow" or "process networks". LabVIEW uses "structured dataflow". Each node on a LabVIEW diagram has a set of inputs and a set of outputs. LabVIEW schedules each node to execute when one item of data has arrived on each of its inputs. Executing the node generates all of its outputs, which then trigger the following nodes to run. There is no queueing on wires unless you explicitly put a queue primitive node on the diagram. Looping (using structures such as For Loops and While Loops) is explicit rather than implicit as in some other languages.
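To make that firing rule concrete, here is a minimal Python sketch. It is not how LabVIEW is implemented; the diagram, the node names, and the `run_dataflow` helper are all made up for illustration. Each node fires exactly once, as soon as every one of its inputs holds a value, and each wire holds a single value with no queueing.

```python
from collections import deque

# Hypothetical diagram: node name -> (function, names of the nodes feeding it).
# The names and constants are illustrative assumptions, not LabVIEW internals.
DIAGRAM = {
    "a": (lambda: 4, []),
    "b": (lambda: 5, []),
    "sum": (lambda x, y: x + y, ["a", "b"]),
    "double": (lambda s: s * 2, ["sum"]),
}


def run_dataflow(diagram):
    """Fire each node once, as soon as all of its inputs have arrived."""
    # Number of inputs each node is still waiting for.
    missing = {name: len(inputs) for name, (_, inputs) in diagram.items()}
    # Which nodes consume each node's output.
    consumers = {name: [] for name in diagram}
    for name, (_, inputs) in diagram.items():
        for src in inputs:
            consumers[src].append(name)

    values = {}  # one value per wire, no queueing
    fired = []   # order in which nodes actually ran
    ready = deque(name for name, count in missing.items() if count == 0)
    while ready:
        name = ready.popleft()
        func, inputs = diagram[name]
        values[name] = func(*(values[src] for src in inputs))
        fired.append(name)
        # Producing an output may complete the inputs of downstream nodes.
        for consumer in consumers[name]:
            missing[consumer] -= 1
            if missing[consumer] == 0:
                ready.append(consumer)
    return values, fired


values, fired = run_dataflow(DIAGRAM)
print(values["double"])  # 18
print(fired)             # ['a', 'b', 'sum', 'double']
```

Note that nothing in the diagram says "run `sum` third". The order falls out of when the data becomes available.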

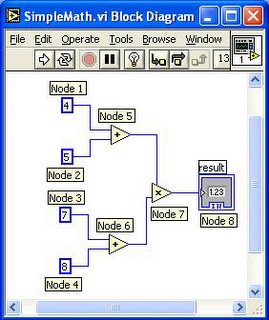

So how do you implement something like this? First, it depends on the hardware you are running on. As you can see, there are a number of things that can run simultaneously. Nodes 1, 2, 3, and 4 can all run in parallel with each other since they have no dependencies. Nodes 5 and 6 can run in parallel with each other since neither depends on the other. In a processing environment that supports parallelism, you could run a lot of the diagram in parallel. Node 7 is a synchronization point. It needs to wait for two different parallel operations (node 5 and node 6) to complete before it can continue.
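One way to see the available parallelism is to group the nodes into "waves", where everything in a wave could run at the same time. The sketch below assumes the wiring implied by the description (nodes 1 and 2 feed node 5, nodes 3 and 4 feed node 6, and nodes 5 and 6 feed node 7); the `DEPENDS_ON` table and `parallel_waves` function are my own illustrative names.

```python
# Assumed wiring of the seven-node diagram: node -> nodes it waits on.
DEPENDS_ON = {
    1: [], 2: [], 3: [], 4: [],
    5: [1, 2],
    6: [3, 4],
    7: [5, 6],  # the synchronization point
}


def parallel_waves(depends_on):
    """Group nodes so that each wave depends only on earlier waves."""
    level = {}

    def level_of(node):
        # A node with no inputs is in wave 0; otherwise it sits one wave
        # after the latest of its inputs.
        if node not in level:
            level[node] = 1 + max(
                (level_of(dep) for dep in depends_on[node]), default=-1
            )
        return level[node]

    waves = {}
    for node in depends_on:
        waves.setdefault(level_of(node), []).append(node)
    return [sorted(waves[k]) for k in sorted(waves)]


print(parallel_waves(DEPENDS_ON))  # [[1, 2, 3, 4], [5, 6], [7]]
```

With unlimited hardware, the diagram would take three steps instead of seven. Whether that is worth it depends on what it costs to hand out the work and to wait at node 7.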

How efficiently you can run this diagram will depend on three things:

- How much parallel hardware is available. Can the hardware perform two adds in parallel?

- The overhead of scheduling each operation

- The overhead of synchronizing at Node 7

Now, one thing to notice is that computations are not particularly sensitive to the outside world. For example, if you can't execute node 5 and node 6 in parallel, the order in which you execute them really doesn't matter. You get the same answer, and the operation takes the same amount of time, whether you do the node 5 add and then the node 6 add or vice versa. Thus, LabVIEW forms "clumps" of instructions which are all basically sequential, and only does scheduling when a clump of instructions is done (using something more efficient than the table lookup, by the way). So, the LabVIEW compiler might emit something like:

1. Put "4" in register 1

2. Put "5" in register 2

3. Put "7" in register 3

4. Put "8" in register 4

5. Add registers 1 and 2 and store it back in register 2

6. Add registers 3 and 4 and store it back in register 4

7. Multiply registers 2 and 4 and store it back in register 2

8. Store register 2 in memory somewhere

9. Look for the next clump to run
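Here is the same clump written as straight-line Python, as a rough sketch only. The `reg` dictionary stands in for CPU registers, and the `swap_adds` flag is a hypothetical knob I added to show that the order of the two adds doesn't change the answer.

```python
def run_clump(swap_adds=False):
    """Run the clump sequentially; there is no scheduling inside it."""
    reg = {}
    reg[1] = 4
    reg[2] = 5
    reg[3] = 7
    reg[4] = 8

    def add_left():
        reg[2] = reg[1] + reg[2]   # 4 + 5

    def add_right():
        reg[4] = reg[3] + reg[4]   # 7 + 8

    # The two adds are independent, so either order is fine.
    first, second = (add_right, add_left) if swap_adds else (add_left, add_right)
    first()
    second()

    reg[2] = reg[2] * reg[4]       # (4 + 5) * (7 + 8)
    memory = {"result": reg[2]}    # "store register 2 in memory somewhere"
    return memory                  # the caller would now look for the next clump


print(run_clump())                 # {'result': 135}
print(run_clump(swap_adds=True))   # {'result': 135}
```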

Now, it so happens that this example is so small that the folks at Intel and IBM do give us some parallelism. A Pentium has multiple instruction units. When executing this sequential set of instructions, it sees that the first four instructions use different registers. Thus, the operations to put numbers into registers will likely occur in parallel. It might only take 1 clock cycle to execute the first 4 instructions because they don't interfere with each other. Likewise, instructions 5 and 6 operate on different sets of registers, and there are multiple adders inside a Pentium. Thus, those two instructions will probably be executed in parallel in the next clock cycle. Instruction number 7 is where things get interesting. It can't execute until both 5 and 6 have completed. Thus, it can't execute in parallel with instructions 5 and 6. (In fact, on some older CPUs, there might be a multiple-clock delay while it waits for instructions 5 and 6 to complete.) Similarly, instruction 8 can't execute until instruction 7 is complete.
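The reasoning above can be mimicked with a toy model. This is a heavily idealized sketch, not a description of any real Pentium: it assumes every instruction takes one cycle, there are unlimited execution units, and the only thing that delays an instruction is waiting for a register that an earlier instruction writes. The `PROGRAM` encoding and `issue_cycles` function are illustrative names of my own.

```python
# Each instruction is (destination, [source registers it reads]).
PROGRAM = [
    ("r1", []),             # 1: put 4 in register 1
    ("r2", []),             # 2: put 5 in register 2
    ("r3", []),             # 3: put 7 in register 3
    ("r4", []),             # 4: put 8 in register 4
    ("r2", ["r1", "r2"]),   # 5: r2 = r1 + r2
    ("r4", ["r3", "r4"]),   # 6: r4 = r3 + r4
    ("r2", ["r2", "r4"]),   # 7: r2 = r2 * r4
    ("mem", ["r2"]),        # 8: store r2 to memory
]


def issue_cycles(program):
    """Earliest cycle each instruction could run in the idealized model."""
    last_write = {}  # register -> cycle of the most recent write to it
    cycles = []
    for dest, sources in program:
        # Run one cycle after the latest producer of any source register.
        cycle = 1 + max((last_write.get(src, 0) for src in sources), default=0)
        cycles.append(cycle)
        last_write[dest] = cycle
    return cycles


print(issue_cycles(PROGRAM))  # [1, 1, 1, 1, 2, 2, 3, 4]
```

Eight instructions finish in four cycles under these assumptions. Real hardware has a limited issue width and longer latencies for operations like multiply, so treat the numbers as a picture of the dependencies rather than a timing prediction.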

So, even on a single-CPU machine, you can get some small amount of parallelism. As always, thank your Intel engineer for this.

OK, this article is getting long, so I'll stop there. If you're a LabVIEW programmer, you hopefully understand a little better how things work under the hood. If you're a student of computer architecture or parallel programming (as I once was), always pay attention to the overhead of distributing your workload, because it may swamp any savings you hope to gain.
